This story originally appeared in The Algorithm, our weekly newsletter on AI. To get stories like this in your inbox first, sign up here.

I’m standing in the parking lot of an apartment building in East London, near where I live. It’s a cloudy day, and nothing seems out of the ordinary.

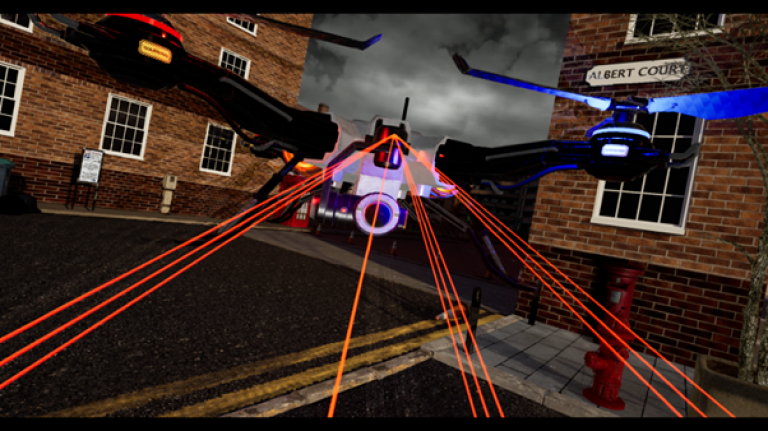

A small drone descends from the skies and hovers in front of my face. A voice echoes from the drone’s speakers, announcing that the police are conducting routine checks in the neighborhood.

I feel as if the drone’s camera is drilling into me. I try to turn my back to it, but the drone follows me like a heat-seeking missile. It asks me to please put my hands up and scans my face and body. Once the scan is complete, it leaves me alone, saying there’s an emergency elsewhere.

I got lucky—my encounter was with a drone in virtual reality as part of an experiment by a team from University College London and the London School of Economics. They’re studying how people react when meeting police drones, and whether they come away feeling more or less trusting of the police.

It seems obvious that encounters with police drones might not be pleasant. But police departments are adopting these sorts of technologies without even trying to find out.

“Nobody is even asking the question: Is this technology going to do more harm than good?” says Aziz Huq, a law professor at the University of Chicago, who is not involved in the research.

The researchers are interested in finding out if the public is willing to accept this new technology, explains Krisztián Pósch, a lecturer in crime science at UCL. People can hardly be expected to like an aggressive, rude drone. But the researchers want to know if there is any scenario where drones would be acceptable. For example, they are curious whether an automated drone or a human-operated one would be more tolerable.

If the reaction is negative across the board, the big question is whether these drones are effective tools for policing in the first place, Pósch says.

“The companies that are producing drones have an interest in saying that [the drones] are working and they are helping, but because no one has assessed it, it is very difficult to say [if they are right],” he says.

It’s important because police departments are racing way ahead and starting to use drones anyway, for everything from surveillance and intelligence gathering to chasing criminals.

Last week, San Francisco approved the use of robots, including drones that can kill people in certain emergencies, such as when dealing with a mass shooter. In the UK most police drones have thermal cameras that can be used to detect how many people are inside houses, says Pósch. These cameras have been used for all sorts of things: catching human traffickers or rogue landlords, and even targeting people suspected of holding parties during covid-19 lockdowns.

Virtual reality will let the researchers test the technology in a controlled, safe way among lots of test subjects, Pósch says.

Even though I knew I was in a VR environment, I found the encounter with the drone unnerving. My opinion of these drones did not improve, even though I’d met a supposedly polite, human-operated one (there are even more aggressive modes for the experiment, which I did not experience).

Ultimately, it may not make much difference whether drones are “polite” or “rude,” says Christian Enemark, a professor at the University of Southampton, who specializes in the ethics of war and drones and is not involved in the research. That’s because the use of drones itself is a “reminder that the police are not here, whether they’re not bothering to be here or they’re too afraid to be here,” he says.

“So maybe there’s something fundamentally disrespectful about any encounter.”

Deeper Learning

GPT-4 is coming, but OpenAI is still fixing GPT-3

The internet is abuzz with excitement about AI lab OpenAI’s latest iteration of its famous large language model, GPT-3. The latest demo, ChatGPT, answers people’s questions via back-and-forth dialogue. Since its launch last Wednesday, the demo has attracted more than 1 million users. Read Will Douglas Heaven’s story here.

GPT-3 is a confident bullshitter and can easily be prompted to say toxic things. OpenAI says it has fixed a lot of these problems with ChatGPT, which answers follow-up questions, admits its mistakes, challenges incorrect premises, and rejects inappropriate requests. It even refuses to answer some questions, such as how to be evil, or how to break into someone’s house.

But it didn’t take long for people to find ways to bypass OpenAI’s content filters. By asking the model to only pretend to be evil, pretend to break into someone’s house, or write code to check if someone would be a good scientist based on their race and gender, people can get the model to spew harmful stereotypes or provide instructions on how to break the law.

Bits and Bytes

Biotech labs are using AI inspired by DALL-E to invent new drugs

Two labs, startup Generate Biomedicines and a team at the University of Washington, separately announced programs that use diffusion models—the AI technique behind the latest generation of text-to-image AI—to generate designs for novel proteins with more precision than ever before. (MIT Technology Review)

The collapse of Sam Bankman-Fried’s crypto empire is bad news for AI

The disgraced crypto kingpin shoveled millions of dollars into research on “AI safety,” which aims to mitigate the potential dangers of artificial intelligence. Now some who received funding fear Bankman-Fried’s downfall could ruin their work. They may not receive the full amount of money promised, or could even be drawn into bankruptcy investigations. (The New York Times)

Effective altruism is pushing a dangerous brand of “AI safety”

Effective altruism is a movement whose believers say they want to have the best impact on the world in the most quantifiable way. Many of them also believe the most effective way of saving the world is coming up with ways to make AI safer in order to avert any threat to humanity from a superintelligent AI. Google’s former ethical AI lead Timnit Gebru says this ideology drives an AI research agenda that creates harmful systems in the name of saving humanity. (Wired)

Someone trained an AI chatbot on her childhood diaries

Michelle Huang, a coder and artist, wanted to simulate having conversations with her younger self, so she fed entries from her childhood diaries to the chatbot and had it reply to her questions. The results are really touching.

The EU threw a €387,000 party in the metaverse. Almost nobody showed up.

The party, hosted by the EU’s executive arm, was supposed to get young people excited about the organization’s foreign policy efforts. Only five people attended. (Politico)
