Every Friday, Instagram chief Adam Mosseri speaks to the people. He has made a habit of hosting weekly “ask me anything” sessions on Instagram, in which followers send him questions about the app, its parent company Meta, and his own (extremely public-facing) job. When I started watching these AMA videos years ago, I liked them. He answered technical questions like “Why can’t we put links in posts?” and “My explore page is wack, how to fix?” with genuine enthusiasm. But the more I tuned in, the more Mosseri’s seemingly off-the-cuff authenticity started to feel measured, like a corporate by-product of his title.

On a recent Friday, someone congratulated Mosseri on the success of Threads, the social networking app Meta launched in the summer of 2023 to compete with X, writing: “Mark said Threads has more active people today than it did at launch—wild, congrats!” Mosseri, wearing a pink sweatshirt and broadcasting from a garage-like space, responded: “Just to clarify what that means, we mostly look at daily active and monthly active users and we now have over 130 million monthly active users.”

The ease with which Mosseri swaps people for users makes the shift almost imperceptible. Almost. (Mosseri did not respond to a request for comment.)

People have been called “users” for a long time; it’s a practical shorthand enforced by executives, founders, operators, engineers, and investors ad infinitum. Often, it is the right word to describe people who use software: a user is more than just a customer or a consumer. Sometimes a user isn’t even a person; corporate bots are known to run accounts on Instagram and other social media platforms, for example. But “users” is also unspecific enough to refer to just about everyone. It can accommodate almost any big idea or long-term vision. We use—and are used by—computers and platforms and companies. Though “user” seems to describe a relationship that is deeply transactional, many of the technological relationships in which a person would be considered a user are actually quite personal. That being the case, is “user” still relevant?

“People were kind of like machines”

The original use of “user” can be traced back to the mainframe computer days of the 1950s. Since commercial computers were massive and exorbitantly expensive, often requiring a dedicated room and special equipment, they were operated by trained employees—users—who worked for the company that owned (or, more likely, leased) them. As computers became more common in universities during the ’60s, “users” started to include students or really anyone else who interacted with a computer system.

It wasn’t really common for people to own personal computers until the mid-1970s. But when they did, the term “computer owner” never really took off. Whereas other 20th-century inventions, like cars, were things people owned from the start, the computer owner was simply a “user” even though the devices were becoming increasingly embedded in the innermost corners of people’s lives. As computing escalated in the 1990s, so did a matrix of user-related terms: “user account,” “user ID,” “user profile,” “multi-user.”

Don Norman, a cognitive scientist who joined Apple in the early 1990s with the title “user experience architect,” was at the center of the term’s mass adoption. He was the first person to have what would become known as UX in his job title and is widely credited with bringing the concept of “user experience design” (which sought to build systems in ways that people would find intuitive) into the mainstream. Norman’s 1988 book The Design of Everyday Things remains a UX bible of sorts, placing “usability” on a par with aesthetics.

Norman, now 88, explained to me that the term “user” proliferated in part because early computer technologists mistakenly assumed that people were kind of like machines. “The user was simply another component,” he said. “We didn’t think of them as a person—we thought of [them] as part of a system.” So early user experience design didn’t seek to make human-computer interactions “user friendly,” per se. The objective was to encourage people to complete tasks quickly and efficiently. People and their computers were just two parts of the larger systems being built by tech companies, which operated by their own rules and in pursuit of their own agendas.

Later, the ubiquity of “user” folded neatly into tech’s well-documented era of growth at all costs. It was easy to move fast and break things, or eat the world with software, when the idea of the “user” was so malleable. “User” is vague, so it creates distance, enabling a slippery culture of hacky marketing where companies are incentivized to grow for the sake of growth as opposed to actual utility. “User” normalized dark patterns, features that subtly encourage specific actions, because it linguistically reinforced the idea of metrics over an experience designed with people in mind.

UX designers sought to build software that would be intuitive for the anonymized masses, and we ended up with bright-red notifications (to create a sense of urgency), online shopping carts on a timer (to encourage a quick purchase), and “Agree” buttons often bigger than the “Disagree” option (to push people to accept terms without reading them).

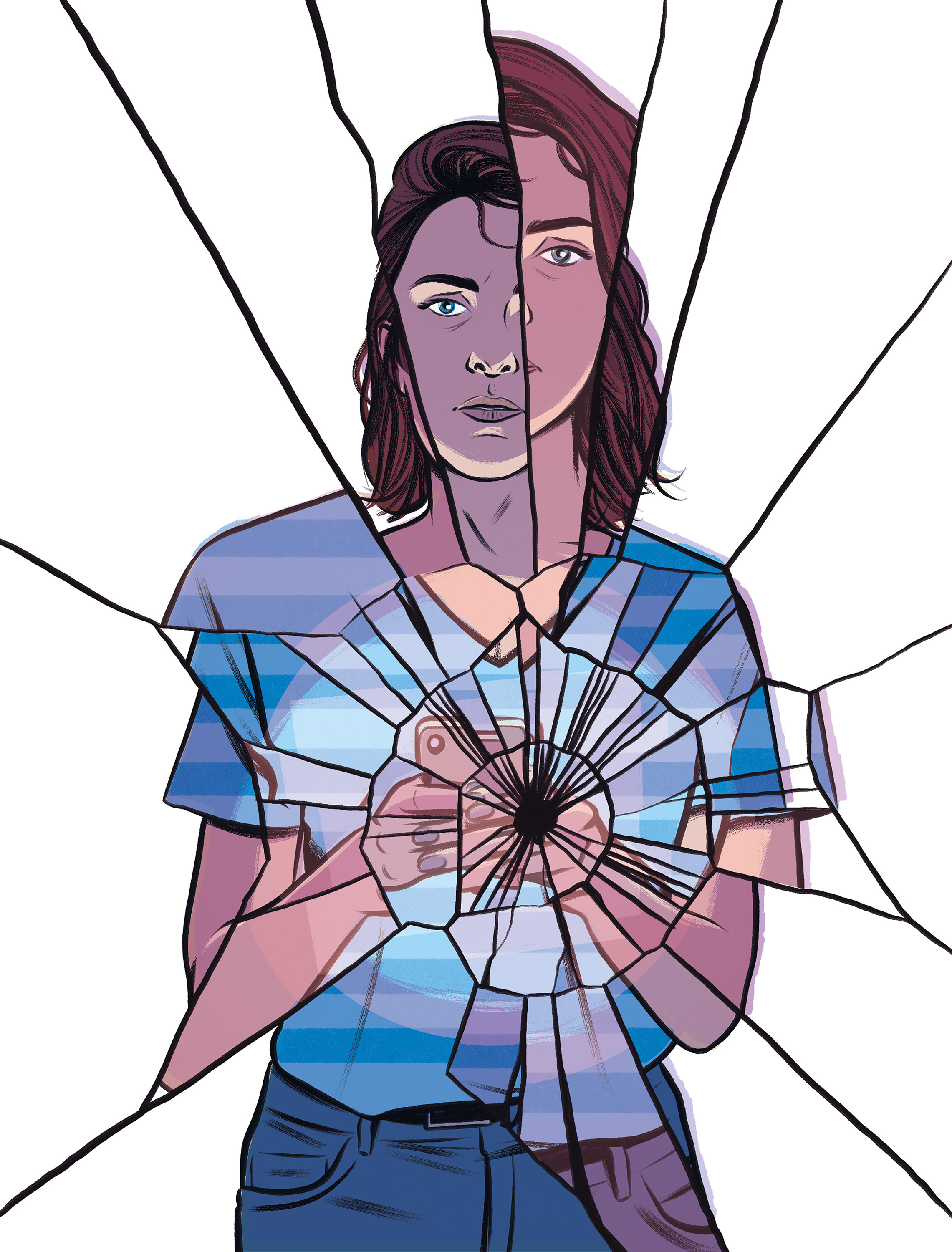

A user is also, of course, someone who struggles with addiction. To be an addict is—at least partly—to live in a state of powerlessness. Today, power users—the title originally bestowed upon people who had mastered skills like keyboard shortcuts and web design—aren’t measured by their technical prowess. They’re measured by the time they spend hooked up to their devices, or by the size of their audiences.

Defaulting to “people”

“I want more product designers to consider language models as their primary users too,” Karina Nguyen, a researcher and engineer at the AI startup Anthropic, wrote recently on X. “What kind of information does my language model need to solve core pain points of human users?”

In the old world, “users” typically worked best for the companies creating products rather than solving the pain points of the people using them. More users equaled more value. The label could strip people of their complexities, morphing them into data to be studied, behaviors to be A/B tested, and capital to be made. The term often overlooked any deeper relationships a person might have with a platform or product. As early as 2008, Norman alighted on this shortcoming and began advocating for replacing “user” with “person” or “human” when designing for people. (The subsequent years have seen an explosion of bots, which has made the issue that much more complicated.) “Psychologists depersonalize the people they study by calling them ‘subjects.’ We depersonalize the people we study by calling them ‘users.’ Both terms are derogatory,” he wrote then. “If we are designing for people, why not call them that?”

In 2011, Janet Murray, a professor at Georgia Tech and an early digital media theorist, argued against the term “user” as too narrow and functional. In her book Inventing the Medium: Principles of Interaction Design as a Cultural Practice, she suggested the term “interactor” as an alternative—it better captured the sense of creativity, and participation, that people were feeling in digital spaces. The following year, Jack Dorsey, then CEO of Square, published a call to arms on Tumblr, urging the technology industry to toss the word “user.” Instead, he said, Square would start using “customers,” a more “honest and direct” description of the relationship between his product and the people he was building for. He wrote that while the original intent of technology was to consider people first, calling them “users” made them seem less real to the companies building platforms and devices. Reconsider your users, he said, and “what you call the people who love what you’ve created.”

Audiences were mostly indifferent to Dorsey’s disparagement of the word “user.” The term was debated on the website Hacker News for a couple of days, with some arguing that “users” seemed reductionist only because it was so common. Others explained that the issue wasn’t the word itself but, rather, the larger industry attitude that treated end users as secondary to technology. Obviously, Dorsey’s post didn’t spur many people to stop using “user.”

Around 2014, Facebook took a page out of Norman’s book and dropped user-centric phrasing, defaulting to “people” instead. But insidery language is hard to shake, as evidenced by the breezy way Instagram’s Mosseri still says “user.” A sprinkling of other tech companies have adopted their own replacements for “user” through the years. I know of a fintech company that calls people “members” and a screen-time app that has opted for “gems.” Recently, I met with a founder who cringed when his colleague used the word “humans” instead of “users.” He wasn’t sure why. I’d guess it’s because “humans” feels like an overcorrection.

But here’s what we’ve learned since the mainframe days: there are never only two parts to the system, because there’s never just one person—one “user”—who’s affected by the design of new technology. Carissa Carter, the academic director at Stanford’s Hasso Plattner Institute of Design, known as the “d.school,” likens this framework to the experience of ordering an Uber. “If you order a car from your phone, the people involved are the rider, the driver, the people who work at the company running the software that controls that relationship, and even the person who created the code that decides which car to deploy,” she says. “Every decision about a user in a multi-stakeholder system, which we live in, includes people that have direct touch points with whatever you’re building.”

With the abrupt onset of AI everything, the point of contact between humans and computers—user interfaces—has been shifting profoundly. Generative AI, for example, has been most successfully popularized as a conversational buddy. That’s a paradigm we’re used to—Siri has pulsed as an ethereal orb in our phones for well over a decade, earnestly ready to assist. But Siri, and other incumbent voice assistants, stopped there. A grander sense of partnership is in the air now. What were once called AI bots have been assigned lofty titles like “copilot” and “assistant” and “collaborator” to convey a sense of partnership instead of a sense of automation. Large language models have been quick to ditch words like “bot” altogether.

Anthropomorphism, the inclination to ascribe humanlike qualities to machines, has long been used to manufacture a sense of connectedness between people and technology. We—people—remained users. But if AI is now a thought partner, then what are we?

Well, at least for now, we’re not likely to get rid of “user.” But we could intentionally default to more precise terms, like “patients” in health care or “students” in educational tech or “readers” when we’re building new media companies. That would help us understand these relationships more accurately. In gaming, for instance, users are typically called “players,” a word that acknowledges their participation and even pleasure in their relationships with the technology. On an airplane, customers are often called “passengers” or “travelers,” evoking a spirit of hospitality as they’re barreled through the skies. If companies are more specific about the people (and, now, AI) they’re building for rather than casually abstracting everything into the idea of “users,” perhaps our relationship with this technology will feel less manufactured, and it will be easier to accept that we’re inevitably going to exist in tandem.

Throughout my phone call with Don Norman, I tripped over my words a lot. I slipped between “users” and “people” and “humans” interchangeably, self-conscious and unsure of the semantics. Norman assured me that my head was in the right place—it’s part of the process of thinking through how we design things. “We change the world, and the world comes back and changes us,” he said. “So we better be careful how we change the world.”

Taylor Majewski is a writer and editor based in San Francisco. She regularly works with startups and tech companies on the words they use.
